Deciding on a token release schedule may sound simple, but it is anything but. It's a strategic choice that can have significant consequences. A well-thought-out token release schedule can prevent market flooding, encourage steady growth, and foster trust in the project. Conversely, a poorly designed schedule may lead to rapid devaluation or loss of investor confidence.

In this article, we will explore the various token release schedules that blockchain projects may adopt. Each type comes with its own set of characteristics, challenges, and strategic benefits. From the straightforwardness of linear schedules to the incentive-driven dynamic releases, understanding these mechanisms is crucial for all crypto founders.

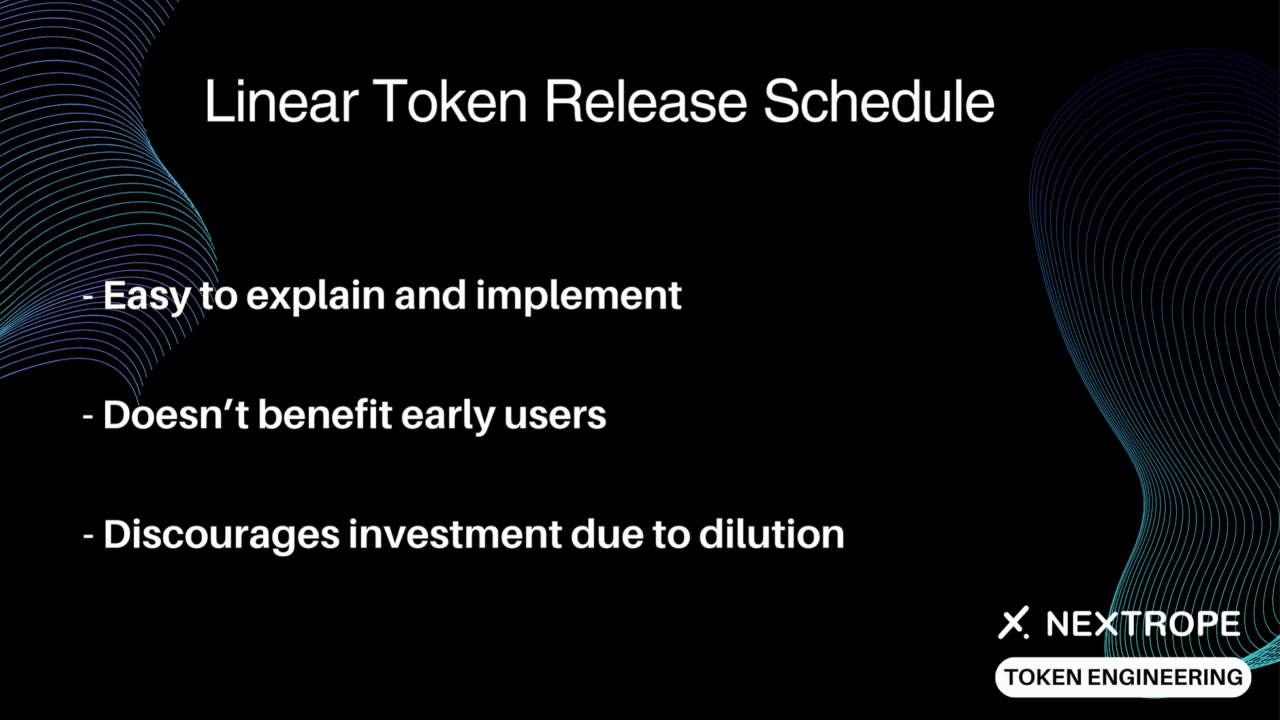

Linear Token Release Schedule

The linear token release schedule is perhaps the most straightforward approach to token distribution. As the name suggests, tokens are released at a constant rate over a specified period until all tokens are fully vested. This approach is favored for its simplicity and ease of understanding, which can be an attractive feature for investors and project teams alike.
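To make the "constant rate" idea concrete, here is a minimal sketch of a linear schedule. The function name, the use of whole periods (for example months), and the sample numbers are illustrative assumptions, not taken from any particular project or contract.

```python
def linear_released(total_tokens: int, duration_periods: int, elapsed_periods: int) -> int:
    """Cumulative tokens released after `elapsed_periods` at a constant rate.

    All names and units here are hypothetical; a period could be a day,
    a month, or a block range, depending on the project.
    """
    if duration_periods <= 0:
        raise ValueError("duration_periods must be positive")
    # Clamp so that nothing is released before the start or beyond the end.
    elapsed = max(0, min(elapsed_periods, duration_periods))
    # Integer math on the cumulative amount: the full allocation is reached
    # exactly when elapsed == duration_periods.
    return total_tokens * elapsed // duration_periods


if __name__ == "__main__":
    # Hypothetical example: 1,200,000 tokens released over 24 months.
    for month in (0, 6, 12, 24):
        print(month, linear_released(1_200_000, 24, month))
```

Every period releases the same amount (50,000 tokens per month in this hypothetical example), which is what makes the schedule easy to communicate and to verify.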

Characteristics

- Predictability: The linear model provides a clear and predictable schedule that stakeholders can rely on. This transparency is often appreciated as it removes any uncertainty regarding when tokens will be available.

- Implementation Simplicity: With no complex rules or conditions, a linear release schedule is relatively easy to implement and manage. It avoids the need for intricate smart contract programming or ongoing adjustments.

- Neutral Incentives: There is no explicit incentive for early investment or late participation. Each stakeholder is treated equally, regardless of when they enter the project. This can be perceived as a fair distribution method, as it does not disproportionately reward any particular group.

Implications

- Capital Dilution Risk: Since tokens are released continuously at the same rate, there's a potential risk that the influx of new tokens into the market could dilute the value, particularly if demand doesn't keep pace with the supply.

- Attracting Continuous Capital Inflow: A linear schedule may face challenges in attracting new investors over time. Without the incentive of increasing rewards or scarcity over time, sustaining investor interest solely based on project performance can be a test of the project's inherent value and market demand.

- Neutral Impact on Project Commitment: The lack of timing-based incentives means that commitment to the project may not be influenced by the release schedule. The focus is instead placed on the project's progress and delivery on its roadmap.

In summary, a linear token release schedule offers a no-frills, equal-footing approach to token distribution. While its simplicity is a strength, it can also be a limitation, lacking the strategic incentives that other models offer. In the next sections, we will compare this to other, more dynamic schedules that aim to provide additional strategic advantages.

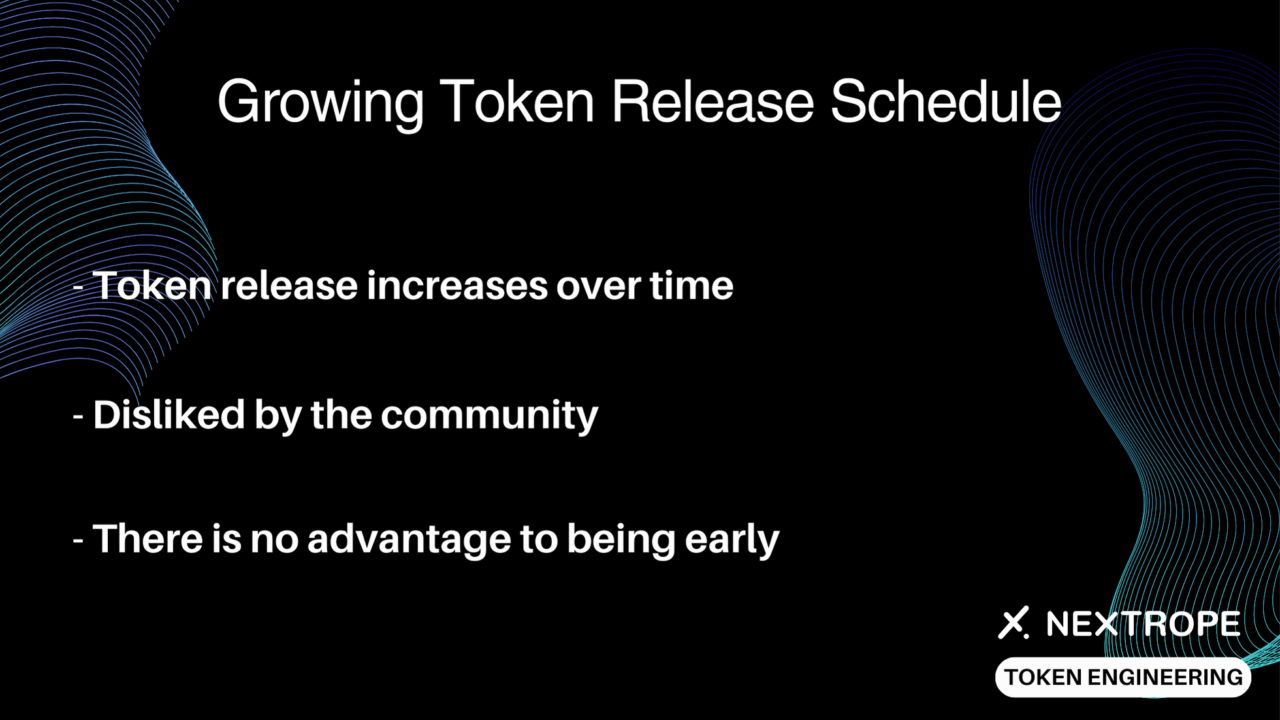

Growing Token Release Schedule

A growing token release schedule turns the dial up on token distribution as time progresses. This schedule is designed to increase the number of tokens released to the market or to stakeholders with each passing period. This approach can often be associated with incentivizing the sustained growth of the project by rewarding long-term holders.
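One possible way to model a growing schedule is to let the amount released in period k be proportional to k, so each period releases more than the one before. This particular shape is an assumption chosen for illustration; real projects may use steps, exponential curves, or other rules. The function name and sample numbers are hypothetical.

```python
def growing_released(total_tokens: int, duration_periods: int, elapsed_periods: int) -> int:
    """Cumulative tokens released when period k releases an amount proportional to k.

    The weights 1, 2, ..., n sum to n * (n + 1) / 2, and the first k of them
    sum to k * (k + 1) / 2, so the cumulative share is k(k + 1) / (n(n + 1)).
    """
    if duration_periods <= 0:
        raise ValueError("duration_periods must be positive")
    elapsed = max(0, min(elapsed_periods, duration_periods))
    numerator = elapsed * (elapsed + 1)
    denominator = duration_periods * (duration_periods + 1)
    return total_tokens * numerator // denominator


if __name__ == "__main__":
    # Hypothetical example: 1,200,000 tokens over 24 months.
    for month in (1, 2, 6, 12, 24):
        print(month, growing_released(1_200_000, 24, month))
```

Under these assumptions only about a quarter of the supply is out at the halfway point, which illustrates why early participants may feel they are waiting longer for their tokens.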

Characteristics

- Incentivized Patience: A growing token release schedule encourages stakeholders to remain invested in the project for longer periods, as the reward increases over time. This can be particularly appealing to long-term investors who are looking to maximize their gains.

- Community Reaction: Such a schedule may draw criticism from those who prefer immediate, high rewards and may be viewed as unfairly penalizing early adopters who receive fewer tokens compared to those who join later. The challenge is to balance the narrative to maintain community support.

- Delayed Advantage: There is a delayed gratification aspect to this schedule. Early investors might not see an immediate substantial benefit, but they are part of a strategy that aims to increase value over time, aligning with the project’s growth.

Implications

- Sustained Capital Inflow: By offering higher rewards later, a project can potentially sustain and even increase its capital inflow as the project matures. This can be especially useful in supporting long-term development and operational goals.

- Potential for Late-Stage Interest: As the reward for holding tokens grows over time, it may attract new investors down the line, drawn by the prospect of higher yields. This can help to maintain a steady interest in the project throughout its lifecycle.

- Balancing Perception and Reality: Managing the community's expectations is vital. The notion that early participants are at a disadvantage must be addressed through clear communication about the long-term vision and benefits.

In contrast to a linear schedule, a growing token release schedule adds a strategic twist that favors the longevity of stakeholder engagement. It's a model that can create a solid foundation for future growth but requires careful communication and management to keep stakeholders satisfied. Up next, we will look at the shrinking token release schedule, which applies an opposite approach to distribution.

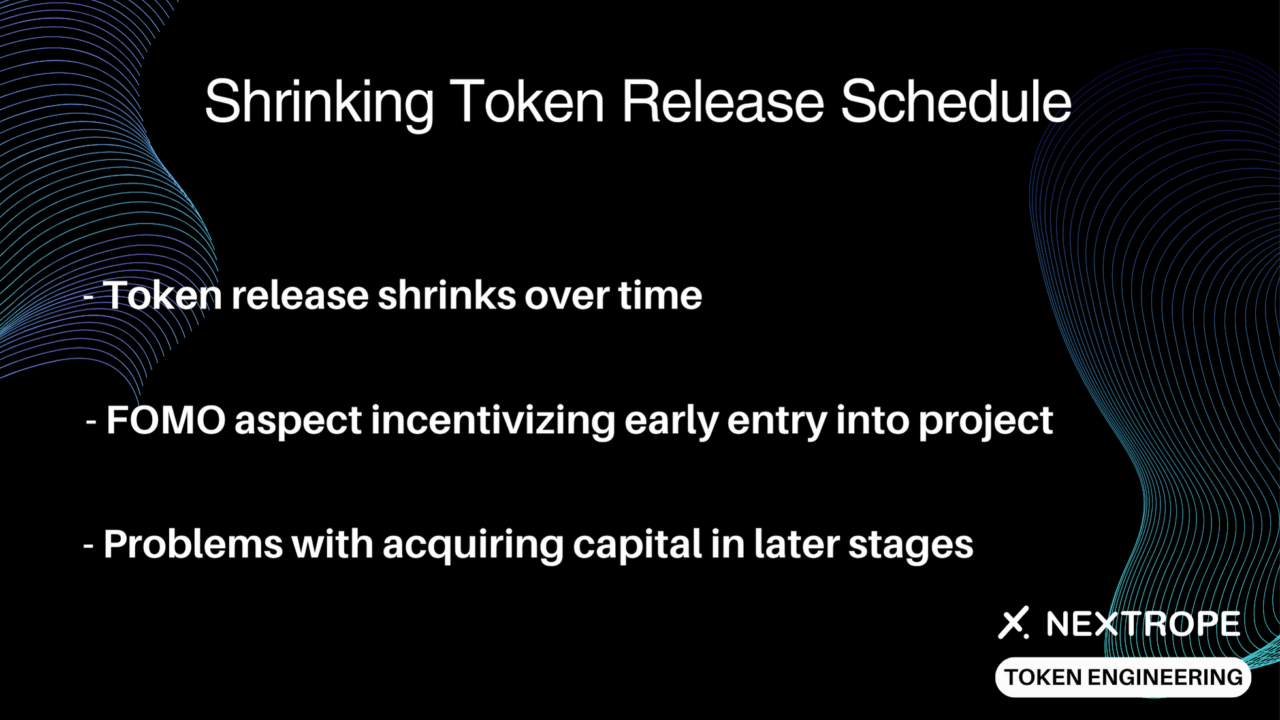

Shrinking Token Release Schedule

The shrinking token release schedule is characterized by a decrease in the number of tokens released as time goes on. This type of schedule is intended to create a sense of urgency and reward early participants with higher initial payouts.
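As a rough sketch, a shrinking schedule can be modeled by letting the first period release the most and each following period release a little less. The linearly decreasing weights used here are an illustrative assumption; other decreasing shapes (for example, periodic halving of the release rate) fit the same description. The function name and sample numbers are hypothetical.

```python
def shrinking_released(total_tokens: int, duration_periods: int, elapsed_periods: int) -> int:
    """Cumulative tokens released when period k releases an amount proportional to (n - k + 1).

    The weights n, n - 1, ..., 1 sum to n * (n + 1) / 2, and the first k of
    them sum to k * (2n - k + 1) / 2, so the cumulative share is
    k(2n - k + 1) / (n(n + 1)).
    """
    if duration_periods <= 0:
        raise ValueError("duration_periods must be positive")
    elapsed = max(0, min(elapsed_periods, duration_periods))
    numerator = elapsed * (2 * duration_periods - elapsed + 1)
    denominator = duration_periods * (duration_periods + 1)
    return total_tokens * numerator // denominator


if __name__ == "__main__":
    # Hypothetical example: 1,200,000 tokens over 24 months.
    for month in (1, 6, 12, 24):
        print(month, shrinking_released(1_200_000, 24, month))
```

With these hypothetical numbers, roughly three quarters of the supply is released by the halfway point, so late-stage inflation is low but so are late-stage rewards.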

Characteristics

- Early Bird Incentives: The shrinking schedule is crafted to reward the earliest adopters the most, offering them a larger share of tokens initially. This creates a compelling case for getting involved early in the project's lifecycle.

- Fear of Missing Out (FOMO): This approach capitalizes on the FOMO effect, incentivizing potential investors to buy in early to maximize their rewards before the release rate decreases.

- Decreased Inflation Over Time: As fewer tokens are released into circulation later on, the potential inflationary pressure on the token's value is reduced. This can be an attractive feature for investors concerned about long-term value erosion.

Implications

- Stimulating Early Adoption: By offering more tokens earlier, projects may see a surge in initial capital inflow, providing the necessary funds to kickstart development and fuel early-stage growth.

- Risk of Decreased Late-Stage Incentives: As the reward diminishes over time, there's a risk that new investors may be less inclined to participate, potentially impacting the project's ability to attract capital in its later stages.

- Market Perception and Price Dynamics: The market must understand that the shrinking release rate is a deliberate strategy to encourage early investment and sustain the token's value over time. However, this can lead to challenges in maintaining interest as the release rate slows, requiring additional value propositions.

A shrinking token release schedule offers an interesting dynamic for projects seeking to capitalize on early market excitement. While it can generate significant early support, the challenge lies in maintaining momentum as the reward potential decreases. This necessitates a robust project foundation and continued delivery of milestones to retain stakeholder interest.

Dynamic Token Release Schedule

A dynamic token release schedule represents a flexible and adaptive approach to token distribution. Unlike static models, this schedule can adjust the rate of token release based on specific criteria, such as the project's milestones, market conditions, or the behavior of token holders. This responsiveness is designed to offer a balanced strategy that can react to the project's needs in real time.
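The sketch below shows one way such a rule could look: a base release per period is scaled up or down according to two signals. Everything in it is a hypothetical assumption made for illustration, including the `PeriodSignals` fields, the thresholds (0.8 and 1.2), the milestone bonus, and the cap. An actual project would define its own criteria and would need a trustworthy source for the inputs.

```python
from dataclasses import dataclass


@dataclass
class PeriodSignals:
    """Hypothetical inputs observed during one release period."""
    milestones_hit: int   # roadmap milestones completed in the period
    price_ratio: float    # market price divided by a reference price


def dynamic_release(base_release: int, remaining: int, signals: PeriodSignals) -> int:
    """Tokens to release this period under an illustrative adjustment rule."""
    # Start from the base rate and add a bonus for each milestone delivered.
    multiplier = 1.0 + 0.25 * signals.milestones_hit
    if signals.price_ratio < 0.8:
        # Weak market: slow the release to limit extra sell pressure.
        multiplier *= 0.5
    elif signals.price_ratio > 1.2:
        # Strong market: demand can absorb a faster release.
        multiplier *= 1.5
    # Cap the adjustment so a single period cannot flood the market.
    multiplier = min(multiplier, 2.0)
    # Never release more than what is left in the allocation.
    return min(remaining, int(base_release * multiplier))


if __name__ == "__main__":
    print(dynamic_release(50_000, 1_000_000, PeriodSignals(0, 1.0)))
    print(dynamic_release(50_000, 1_000_000, PeriodSignals(1, 0.7)))
    print(dynamic_release(50_000, 1_000_000, PeriodSignals(2, 1.3)))
```

Even in this small sketch the rule has several tunable parameters, which hints at why dynamic schedules demand more explanation to stakeholders than the static models.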

Characteristics

- Adaptability: The most significant advantage of a dynamic schedule is its ability to adapt to changing circumstances. This can include varying the release rate to match market demand, project development stages, or other critical factors.

- Risk Management: By adjusting the flow of tokens in response to market conditions, a dynamic schedule can help mitigate certain risks, such as inflation, token price volatility, and the impact of market manipulation.

- Stakeholder Alignment: This schedule can be structured to align incentives with the project's goals. This means rewarding behaviors that contribute to the project's longevity, such as holding tokens for certain periods or participating in governance.

Implications

- Balancing Supply and Demand: A dynamic token release can fine-tune the supply to match demand, aiming to stabilize the token price. This can be particularly effective in avoiding the boom-and-bust cycles that plague many cryptocurrency projects.

- Investor Engagement: The flexibility of a dynamic schedule keeps investors engaged, as the potential for reward can change in line with project milestones and success markers, maintaining a sense of involvement and investment in the project’s progression.

- Complexity and Communication: The intricate nature of a dynamic schedule requires clear and transparent communication with stakeholders to ensure understanding of the system. The complexity also demands robust technical implementation to execute the varying release strategies effectively.

A dynamic token release schedule is a sophisticated tool that, when used judiciously, offers great flexibility in navigating unpredictable crypto markets. It requires a careful balance of anticipation, reaction, and communication, but it also gives a project the opportunity to foster its growth.

Conclusion

A linear token release schedule is the epitome of simplicity and fairness, offering a steady and predictable path. The growing schedule promotes long-term investment and project loyalty, potentially leading to sustained growth. In contrast, the shrinking schedule seeks to capitalize on the enthusiasm of early adopters, fostering a vibrant initial ecosystem. Lastly, the dynamic schedule stands out for its intelligent adaptability, aiming to strike a balance between various stakeholder interests and market forces.

The choice of token release schedule should not be made in isolation; it must consider the project's goals, the nature of its community, the volatility of the market, and the overarching vision of the creators.

FAQ

What are the different token release schedules?

- Linear, growing, shrinking, and dynamic schedules.

How does a linear token release schedule work?

- Releases tokens at a constant rate over a specified period.

What is the goal of a shrinking token release schedule?

- To reward early adopters with more tokens up front, with the release rate decreasing over time.
