Decentralization and token-based economics are concepts that have started to reach far beyond the blockchain industry. Web 3.0: find out what the world’s biggest tech and venture capital companies are talking about today.

Read about:

- Web 2.0

- Semantic web

- Decentralized web

- AI and web 3.0

- Change of user experience

Web 2.0 - How does the World Wide Web work today?

If you wonder which technology benefits from over 3 billion users, here is the answer: the World Wide Web. Today it’s difficult to imagine the modern world without it, even for people who remember times before its creation. This technology changed and defines how we share, create and consume information. It's present in every industry, shaping the way we work, learn and play - for many the internet became the central point of their lifestyle.

Web 1.0 and web 2.0

Essentially, the terms web 1.0 and web 2.0 refer to periods in the web's evolution, each marked by different formats and technologies.

Web 1.0, also known as the Static Web, was the first version of the World Wide Web, created in the 1990s. Back then pages were mostly read-only, with little user interaction, and searching for information was inconvenient because early search tools were limited.

Thanks to more advanced web technologies, such as JavaScript and CSS, web 2.0 made the internet far more interactive. Since then, social networks and interactive platforms have flourished.

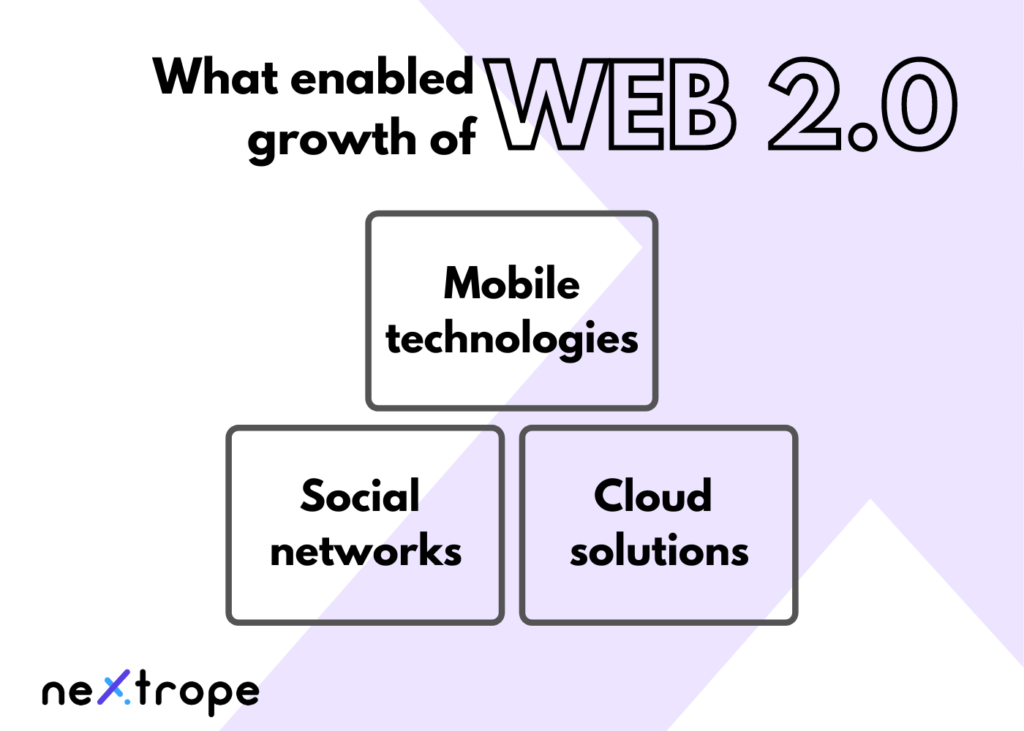

Growth of the web 2.0 was largely driven by 3 factors:

- mobile technology

- social networks

- cloud solutions

Mobile technologies

The arrival of smartphones and mobile internet access drastically increased both the number of web users and the time they spend online. Since then we’ve been living in an always-connected state. Reaching into your pocket - that’s all it takes to get access to the web.

Social networks

Meta isn’t the 11th most-valuable company for no reason. Before Facebook or Myspace, the internet was largely anonymous, with limited interactions between users. Social media platforms brought revolutionary possibilities. User-generated content, sharing, and commenting disrupted the way information circulates.

What’s more, our internet persona became an extension of the real one. Thus, not only did social life partly move to the web, but we also started to trust each other there, having tools that to some extent enable us to verify each other's identity. Without that trust, the success of companies such as Airbnb or Uber would never have been possible.

Cloud solutions

This article was created, reviewed, and edited using Google Docs - part of the cloud solution provided by Google that most readers are probably familiar with.

Cloud providers redefined how we store and share data. It is the cloud that enables the creation and maintenance of most web pages and applications we know today. Companies were able to move from owning expensive infrastructure to renting data storage, tools, or even computing power from dedicated providers.

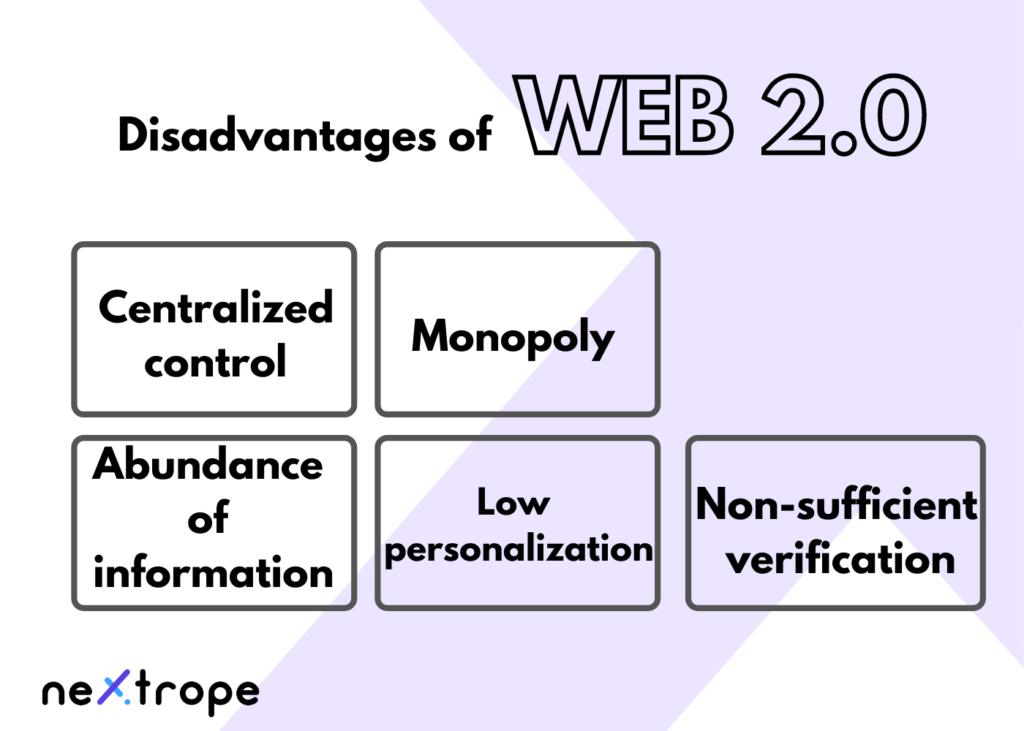

Disadvantages of Web 2.0

Web 2.0 definitely shapes how today's society functions, giving us possibilities we couldn’t even dream about before. Yet it's not free from disadvantages:

- centralization

- abundance of information

- insufficient verification

- monopolization

- low personalization

With more and more issues that we’re grappling with, one question has become inevitable: What will be next?

Semantic Web

The semantic web is a concept formulated in 1999 by Tim Berners-Lee, the creator of the World Wide Web:

I have a dream for the Web [in which computers] become capable of analyzing all the data on the Web – the content, links, and transactions between people and computers. A "Semantic Web", which makes this possible, has yet to emerge, but when it does, the day-to-day mechanisms of trade, bureaucracy, and our daily lives will be handled by machines talking to machines. The "intelligent agents" people have touted for ages will finally materialize.

The vision of an intelligent internet that can understand its users and work without external governance was far from realistic back then. Yet today, with the new technologies we’ve developed, it may become reality sooner than we could have predicted. This is the moment to introduce you to the phenomenon of web 3.0.

The term Web 3.0, in its blockchain-related sense, was coined in 2014 by Gavin Wood, Ethereum co-founder and Polkadot creator, and refers to a "decentralized online ecosystem based on blockchain." The idea of a web that relies on scattered nodes instead of centralized servers quickly gained a significant number of supporters.

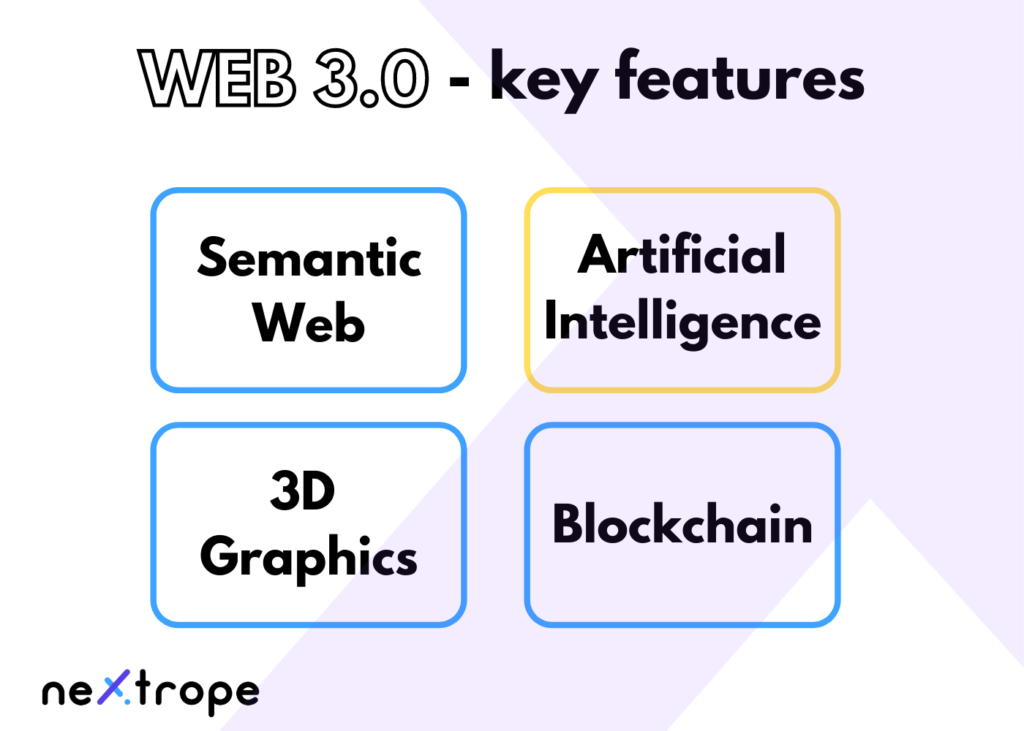

Web 3.0 - key features

- Semantic Web

- Artificial Intelligence

- Decentralization

- 3D Graphics

Semantic web and web 3.0

In the semantic web, computers are able to analyze data with an understanding of its content, including text, transactions, and connections between users or events. In such systems, machines are able to accurately read our emotions, feelings, and intentions just by analyzing our input. Applying this approach would greatly increase data connectivity and, as a consequence, provide a better experience for web users.
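To make the idea of machine-readable connections more concrete, here is a minimal sketch in Python. It assumes data is expressed as subject-predicate-object statements ("triples"), a common way of modelling semantic data. The sample facts and the `query` helper are purely illustrative and are not taken from any specific semantic web library.

```python
from typing import Optional

# A triple is a (subject, predicate, object) statement.
Triple = tuple[str, str, str]

# Hypothetical sample data: connections between users, items, and events.
TRIPLES: list[Triple] = [
    ("alice", "purchased", "camera"),
    ("camera", "is_a", "product"),
    ("alice", "reviewed", "camera"),
    ("bob", "follows", "alice"),
]


def query(
    triples: list[Triple],
    subject: Optional[str] = None,
    predicate: Optional[str] = None,
    obj: Optional[str] = None,
) -> list[Triple]:
    """Return every triple matching the given fields; None acts as a wildcard."""
    return [
        t
        for t in triples
        if (subject is None or t[0] == subject)
        and (predicate is None or t[1] == predicate)
        and (obj is None or t[2] == obj)
    ]


# Everything the data says about "alice" as a subject.
alice_facts = query(TRIPLES, subject="alice")
```

Because every statement has the same shape, a program can follow links between users, items, and events without knowing in advance how a particular page is laid out.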

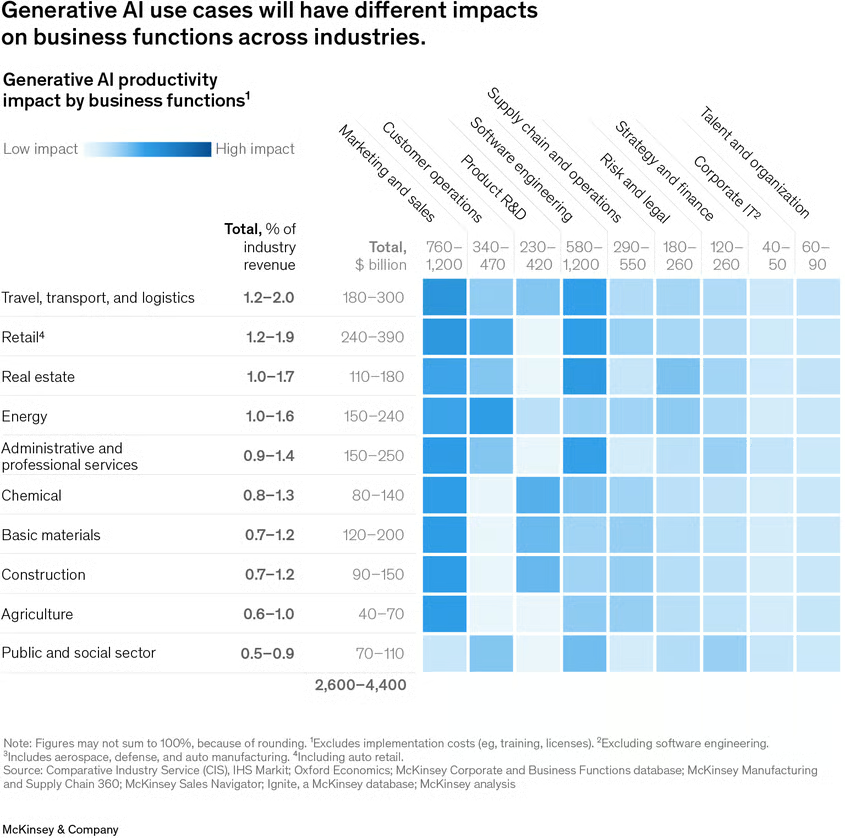

Artificial intelligence

Machine learning and artificial intelligence are key technologies for web 3.0. Web 2.0 already offers some semantic capabilities, but they are mostly human-based and therefore prone to bias and manipulation.

Let’s take online reviews as an example. Today, any company can simply gather a large number of users and pay them to write positive reviews of its product or service. AI that is able to distinguish fake reviews from real ones would increase the reliability of data available online.

Essentially, AI and machine learning will not only enable computers to decode meanings contained in data but also provide a more personalized experience to web 3.0 users. Online platforms will be able to tailor their appearance or content to an individual web user. This will bring a revolutionary change to the e-commerce sector as targeted advertising will become routine.

3D graphics

According to some theories, with the introduction of web 3.0 borders between the real and digital world will begin to fade. The constant development of graphic technologies may even enable us to create whole 3D virtual worlds in web 3.0.

This concept is closely related to another topic that has recently gained significant popularity: the metaverse. 3D graphics in web 3.0 are expected to revolutionize sectors such as gaming, e-commerce, healthcare, and real estate.

Decentralized web

Current web infrastructure is based on data stored in centralized locations - single servers. That can make it prone to manipulation or attacks. Furthermore, most databases are controlled by a limited number of companies such as Meta or Google. Web 3.0 aims to change that by introducing decentralized networks.

In web 3.0, data will be stored in multiple locations - nodes. Any change to the data will have to be approved by the nodes in the network, according to its consensus rules. The exchange of information will take place in peer-to-peer networks. This will not only take the data out of the hands of a central authority but also make it more resilient to manipulation and attacks.
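The approval step described above can be sketched in a few lines of Python. This is a simplified, single-process illustration and not a real consensus protocol: the `Node` class, its validation rule, and the `quorum` parameter are all assumptions made for the example. The required share of approvals differs between networks, so it is left as a parameter; setting it to `1.0` means every node must agree.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A hypothetical network node holding its own copy of the data."""
    name: str
    honest: bool = True
    store: dict[str, str] = field(default_factory=dict)

    def approves(self, key: str, value: str) -> bool:
        # Illustrative rule only: a healthy node accepts any non-empty value,
        # while a faulty node rejects every proposal.
        return self.honest and value != ""


def propose_change(nodes: list[Node], key: str, value: str, quorum: float = 1.0) -> bool:
    """Apply the change on every node only if enough nodes approve it."""
    votes = sum(node.approves(key, value) for node in nodes)
    if votes / len(nodes) < quorum:
        return False  # rejected: no copy of the data is modified
    for node in nodes:
        node.store[key] = value  # replicate the accepted change everywhere
    return True


nodes = [Node("a"), Node("b"), Node("c", honest=False)]
unanimous = propose_change(nodes, "profile", "v1", quorum=1.0)  # one node objects
majority = propose_change(nodes, "profile", "v1", quorum=0.6)   # two of three agree
```

In this sketch a single objecting node blocks the change under a unanimous rule, while a lower threshold lets the network keep working when some nodes are faulty. That trade-off is one reason the threshold is a design decision rather than a fixed value.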

Digital assets in 3.0

Web 3.0 is expected to bring a totally new approach to digital assets. A token economy based on blockchain technology will become an even more common phenomenon.

Even today we can observe how blockchain technology is shaping the exchange of goods, investments, and ownership rights. Fungible and non-fungible tokens constantly find new applications that provide users with groundbreaking possibilities in industries such as gaming, real estate, or even healthcare.

On the internet of the future, ownership and control will become even more vital issues. Blockchain technologies, and NFTs to be more precise, can bring significant improvement in this area. What if assets, such as digital art or virtual land plots, already carried data about their owners and creators? Data that would be extremely hard to manipulate, because it would be stored and confirmed in distributed ledgers.
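As a rough illustration of why such records are hard to manipulate, the Python sketch below chains ownership records together with hashes. It is a local toy model and not an actual NFT standard or blockchain: the record fields and the helper names (`record_hash`, `append_transfer`, `is_valid`) are hypothetical, and a real distributed ledger would additionally replicate and confirm the records across many nodes.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def record_hash(record: dict) -> str:
    """Hash a record deterministically (keys sorted before serializing)."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_transfer(ledger: list[dict], asset_id: str, creator: str, owner: str) -> None:
    """Append an ownership record that points at the previous record's hash."""
    prev_hash = ledger[-1]["hash"] if ledger else GENESIS
    body = {"asset_id": asset_id, "creator": creator, "owner": owner, "prev_hash": prev_hash}
    ledger.append({**body, "hash": record_hash(body)})


def is_valid(ledger: list[dict]) -> bool:
    """Check that every record is intact and correctly linked to its predecessor."""
    prev_hash = GENESIS
    for entry in ledger:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev_hash or entry["hash"] != record_hash(body):
            return False
        prev_hash = entry["hash"]
    return True


ledger: list[dict] = []
append_transfer(ledger, "artwork-1", creator="alice", owner="alice")
append_transfer(ledger, "artwork-1", creator="alice", owner="bob")
```

Changing the owner or creator in an earlier record changes its hash, so the check in `is_valid` fails unless every later record is rewritten as well. In a distributed ledger that rewrite would also have to be accepted by the other nodes.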

What will change for web pages with web 3.0

Where will web 3.0 take us? According to many experts, we shouldn't treat web 3.0 as a totally new internet. It's just another stage of its evolution. Some of the solutions on which web 3.0 will be based already exist and function. In many cases, it's just a matter of scale.

Yet the new web will definitely make room for revolutionary business models. Personalized web pages or shops in 3D virtual spaces are just some examples of the new possibilities that web 3.0 will create.
